Leveraging the Cloud to Improve Hospital Occupancy Planning

Trillium Health Partners (THP) Institute for Better Health (IBH) develops a prediction algorithm to improve hospital capacity forecasting and better match bed supply with patient demand.

Bed occupancy forecasting, a process that involves analyzing admission and discharge data, has traditionally been done mostly manually. Cloud computing speeds the process up significantly, and the resulting model helps staff quickly identify an expected increase in demand for beds and respond accordingly. THP IBH, in collaboration with the UBC Cloud Innovation Centre (UBC CIC), develops a prototype to improve the hospitals’ two-to-seven-day capacity forecasting using Amazon Web Services (AWS).

Approach

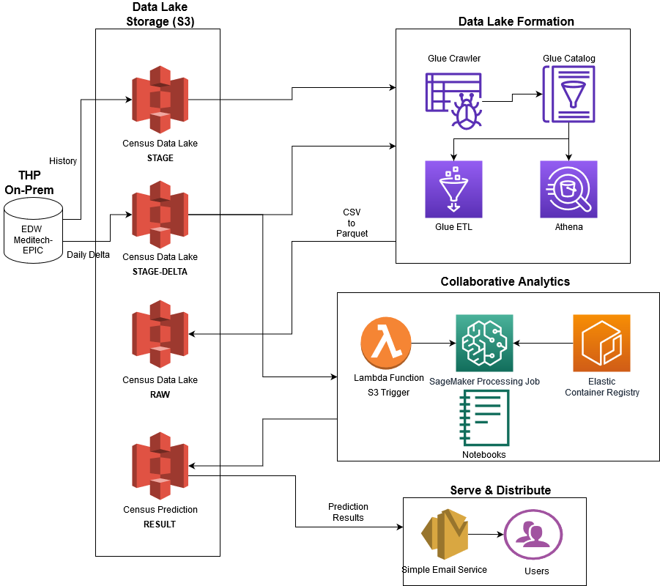

Using AWS Lake Formation, THP built a solution that imports both raw and transformed on-prem data from multiple sources into a centralized repository called a data lake, then automatically extracts the data and dynamically identifies its schema in order to create a data catalog. From here, the data team is able to identify any gaps in the data.

Centralizing the data and its management in the data lake simplifies collaboration between researchers, data scientists, and any other team that needs to solve business problems. This solution demonstrates how THP might meet operational decision makers' requirements quickly and reliably, as it did with the prediction of patient census to support capacity management.

Technical Details

To build the prototype census prediction algorithm, the team first identified all data sources and data elements, performed a one-time extraction of all necessary historical data, and stored the data in Amazon S3 using four buckets, one for each stage of data processing:

1. Stage (where source data first gets loaded)

2. Raw (sourced data has undergone some basic transformation)

3. Trusted (exploration zone)

4. Refined (production zone)

AWS Glue crawlers create the catalog of all the data assets. The Glue Data Catalog makes the data discoverable: data dictionaries can be created and sensitive data tagged in an easily searchable manner. As an additional benefit, the cataloged data can be queried, so analysts can write SQL against it using Amazon Athena.
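As a rough illustration of querying the cataloged data, the sketch below starts an Athena query through a boto3 Athena client passed in by the caller. The table name `admissions`, the column `admit_date`, the database name, and the results location are all hypothetical; the source does not describe the actual schema.

```python
def start_admissions_query(athena_client, database, output_location):
    """Start an Athena query counting admissions per day.

    `athena_client` is expected to behave like boto3.client("athena").
    The table and column names below are illustrative assumptions.
    """
    query = (
        "SELECT admit_date, COUNT(*) AS admissions "
        "FROM admissions "
        "GROUP BY admit_date "
        "ORDER BY admit_date"
    )
    response = athena_client.start_query_execution(
        QueryString=query,
        QueryExecutionContext={"Database": database},
        # Athena writes result files to this S3 location.
        ResultConfiguration={"OutputLocation": output_location},
    )
    # The ID can later be used to poll for status and fetch results.
    return response["QueryExecutionId"]
```

Passing the client in as an argument keeps the function easy to exercise without AWS credentials; in practice one would create it with `boto3.client("athena")`.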

In the next step, Glue ETL jobs transform data between the various zones of the data lake. Specifically, Glue ETL jobs moved data between the stage and raw zones, ingesting the input CSV files and writing Parquet files.
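The sketch below shows one way such a conversion job could be kicked off, assuming the conversion runs from the stage zone to the raw zone as the zone descriptions suggest. The bucket names, the Glue job name, and the `--source_uri` / `--target_uri` job arguments are hypothetical; the client is expected to behave like `boto3.client("glue")`.

```python
from pathlib import PurePosixPath

# Hypothetical bucket names, one per zone of the data lake.
ZONE_BUCKETS = {
    "stage": "example-lake-stage",
    "raw": "example-lake-raw",
    "trusted": "example-lake-trusted",
    "refined": "example-lake-refined",
}


def raw_parquet_uri(stage_key):
    """Map a stage-zone CSV key to the matching raw-zone Parquet URI."""
    path = PurePosixPath(stage_key)
    if path.suffix.lower() != ".csv":
        raise ValueError(f"expected a .csv key, got {stage_key!r}")
    return f"s3://{ZONE_BUCKETS['raw']}/{path.with_suffix('.parquet')}"


def start_conversion(glue_client, job_name, stage_key):
    """Start a Glue job run that converts one CSV file to Parquet."""
    response = glue_client.start_job_run(
        JobName=job_name,
        # Glue passes job arguments as "--name": "value" pairs.
        Arguments={
            "--source_uri": f"s3://{ZONE_BUCKETS['stage']}/{stage_key}",
            "--target_uri": raw_parquet_uri(stage_key),
        },
    )
    return response["JobRunId"]
```

Keeping the same key in both zones and changing only the bucket and file extension makes it easy to trace a Parquet file back to the CSV it came from.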

SageMaker Studio, which hosts Jupyter Notebook and JupyterLab, let the team connect to S3 easily and read data from it as if they were working locally. While building the initial prototype on premises, the team found that the seasonal autoregressive integrated moving average (SARIMA) model performed best and adopted it for the forecast.
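For reference, the general form of a SARIMA$(p,d,q)(P,D,Q)_s$ model can be written with the backshift operator $B$ (where $B\,y_t = y_{t-1}$) as follows. The source does not state the orders or the seasonal period the team chose; a period of $s = 7$ would be a natural assumption for a daily census with a weekly pattern.

$$
\phi_p(B)\,\Phi_P(B^s)\,(1-B)^d\,(1-B^s)^D\,y_t \;=\; \theta_q(B)\,\Theta_Q(B^s)\,\varepsilon_t
$$

Here $y_t$ is the census on day $t$, $\phi_p$ and $\theta_q$ are the non-seasonal autoregressive and moving-average polynomials, $\Phi_P$ and $\Theta_Q$ are their seasonal counterparts, $(1-B)^d$ and $(1-B^s)^D$ are the non-seasonal and seasonal differencing terms, and $\varepsilon_t$ is white noise.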

A SageMaker Processing Job loads a container image from the registry and copies the required data from S3 into a specific directory in the container, where the Python script ingests it. The script runs and writes its predictions to a designated output directory, from which the Processing Job uploads them back to S3.
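A minimal sketch of what such a script could look like is shown below. It assumes the conventional SageMaker Processing mount points (`/opt/ml/processing/input` and `/opt/ml/processing/output`), a hypothetical input file `census.csv` with a `census` column, and a hypothetical output file `predictions.csv`. The forecast itself is a seasonal-naive stand-in, not the team's SARIMA model.

```python
import csv
from pathlib import Path

# Conventional SageMaker Processing mount points. The real directories are
# whatever the job configuration specifies, so treat these as assumptions.
INPUT_DIR = Path("/opt/ml/processing/input")
OUTPUT_DIR = Path("/opt/ml/processing/output")


def seasonal_naive_forecast(history, horizon=7, season=7):
    """Stand-in for the real model: repeat the last full season of values."""
    if len(history) < season:
        raise ValueError("need at least one full season of history")
    last_season = history[-season:]
    return [last_season[i % season] for i in range(horizon)]


def run(input_dir=INPUT_DIR, output_dir=OUTPUT_DIR, horizon=7):
    """Read census.csv from input_dir and write predictions.csv to output_dir."""
    with open(Path(input_dir) / "census.csv", newline="") as f:
        history = [float(row["census"]) for row in csv.DictReader(f)]

    forecast = seasonal_naive_forecast(history, horizon=horizon)

    # Anything written here is uploaded to S3 by the Processing Job.
    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    with open(output_dir / "predictions.csv", "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["days_ahead", "predicted_census"])
        for day, value in enumerate(forecast, start=1):
            writer.writerow([day, value])
    return forecast
```

Inside the container, the entry point would simply call `run()`; the script never talks to S3 directly because the Processing Job handles the copy in and the upload out.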

In the last step, a Lambda function triggers the processing jobs. The function listens for events in the data lake and, once new data is available for prediction, creates a processing job that loads the data from S3 and saves the predictions back to S3.
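The trigger could be sketched roughly as follows, assuming the Lambda function is invoked by an S3 event notification when a new object lands in the data lake. The environment variable names (`ROLE_ARN`, `IMAGE_URI`, `OUTPUT_S3_URI`), the job-name prefix, the instance type, and the container paths are all hypothetical.

```python
import os
import time
import urllib.parse


def build_job_request(event, job_name, role_arn, image_uri, output_s3_uri):
    """Translate an S3 event into create_processing_job keyword arguments."""
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    # Object keys arrive URL-encoded in S3 event notifications.
    key = urllib.parse.unquote_plus(record["object"]["key"])
    return {
        "ProcessingJobName": job_name,
        "RoleArn": role_arn,
        "AppSpecification": {"ImageUri": image_uri},
        "ProcessingResources": {
            "ClusterConfig": {
                "InstanceCount": 1,
                "InstanceType": "ml.m5.xlarge",  # illustrative choice
                "VolumeSizeInGB": 30,
            }
        },
        "ProcessingInputs": [{
            "InputName": "census",
            "S3Input": {
                "S3Uri": f"s3://{bucket}/{key}",
                "LocalPath": "/opt/ml/processing/input",
                "S3DataType": "S3Prefix",
                "S3InputMode": "File",
            },
        }],
        "ProcessingOutputConfig": {"Outputs": [{
            "OutputName": "predictions",
            "S3Output": {
                "S3Uri": output_s3_uri,
                "LocalPath": "/opt/ml/processing/output",
                "S3UploadMode": "EndOfJob",
            },
        }]},
    }


def lambda_handler(event, context, sagemaker_client=None):
    """Create a processing job for the newly arrived object."""
    if sagemaker_client is None:
        import boto3  # imported lazily so the module loads without boto3
        sagemaker_client = boto3.client("sagemaker")
    request = build_job_request(
        event,
        job_name=time.strftime("census-forecast-%Y%m%d-%H%M%S", time.gmtime()),
        role_arn=os.environ["ROLE_ARN"],
        image_uri=os.environ["IMAGE_URI"],
        output_s3_uri=os.environ["OUTPUT_S3_URI"],
    )
    sagemaker_client.create_processing_job(**request)
    return {"job_name": request["ProcessingJobName"]}
```

Separating `build_job_request` from the handler keeps the request construction free of AWS calls, so it can be checked on its own.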

To deliver the final predictions, a PDF report was emailed to stakeholders using Amazon Simple Email Service (SES). The report presented the predictions in charts built with Plotly and Matplotlib.
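One way to email a PDF through SES is to build a MIME message with the standard library and hand it to the SES `send_raw_email` call, as in the sketch below. The subject line, body text, and file name are hypothetical, and the client is expected to behave like `boto3.client("ses")`; how the team actually assembled and sent the report is not described in the source.

```python
from email.message import EmailMessage


def build_report_email(sender, recipients, pdf_bytes,
                       filename="census_forecast.pdf"):
    """Build an email with the forecast report attached as a PDF."""
    msg = EmailMessage()
    msg["Subject"] = "Census forecast report"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg.set_content("The latest census forecast is attached as a PDF.")
    msg.add_attachment(
        pdf_bytes, maintype="application", subtype="pdf", filename=filename
    )
    return msg


def send_report(ses_client, sender, recipients, pdf_bytes):
    """Send the report through SES and return the SES message ID."""
    msg = build_report_email(sender, recipients, pdf_bytes)
    response = ses_client.send_raw_email(
        Source=sender,
        Destinations=list(recipients),
        # Raw sending is needed because the message carries an attachment.
        RawMessage={"Data": msg.as_bytes()},
    )
    return response["MessageId"]
```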

Architecture Diagram

THP IBH wrote a blog post with further details on the solution, available here.

Press Release

Trillium Health Partners Press Release.

Photo used with permission from Trillium Health Partners.

About the University of British Columbia Cloud Innovation Centre (UBC CIC)

The UBC CIC is a public-private collaboration between UBC and Amazon Web Services (AWS). A CIC identifies digital transformation challenges, the problems or opportunities that matter to the community, and provides subject matter expertise and CIC leadership.

Using Amazon’s innovation methodology, dedicated UBC and AWS CIC staff work with students, staff and faculty, as well as community, government or not-for-profit organizations to define challenges, to engage with subject matter experts, to identify a solution, and to build a Proof of Concept (PoC). Through co-op and work-integrated learning, students also have an opportunity to learn new skills which they will later be able to apply in the workforce.