Accelerating Computational Chemistry Research with the Cloud Phase II

Project phases

- Phase 1 - Accelerating Computational Chemistry Research with the Cloud

- Phase 2 - Accelerating Computational Chemistry Research with the Cloud Phase II (current page)

Faculty members at the University of British Columbia (UBC) who conduct research in Computational Chemistry run complex calculations and computer simulations to understand and predict molecular behavior. They saw a need for improvement because their current program is time-consuming to run and requires continuous manual oversight. The researchers collaborated with the UBC CIC to develop a solution that migrates the program to the AWS cloud and optimizes its process. The outcome gives the researchers greater control, flexibility, and efficiency in their work.

Continuing the collaboration between the Computational Chemistry researchers and the UBC CIC, a second phase was undertaken to enhance the solution prototype. With the prototype already running on the AWS cloud, the second phase developed it further to enable cost control and maximize performance efficiency while maintaining the same task management functions as before.

Approach

Currently, the program the UBC Computational Chemistry researchers use sometimes requires days to complete a calculation or simulation, does not display the status of calculations during execution, and is manually maintained by one person. The UBC CIC approached the challenge by developing a solution that runs the program on the AWS cloud. The solution removes the existing limitations and gives researchers increased efficiency and control of their work.

In the project’s initial phase, the team developed a prototype solution that uses a command-line interface (CLI) to list, manage, and delete data. The prototype gave the researchers more flexibility by allowing calculations to be divided and run at the same time. It also reported the status of calculations, so users can see whether a calculation is running or has failed and decide whether to abort it. Due to the size of the project, further development required a second phase, which targeted three goals: 1) improving performance by reducing overhead tasks, 2) minimizing cost by controlling resource auto scaling and resource type, and 3) maintaining control over the tasks in the pipeline.

Technical Details

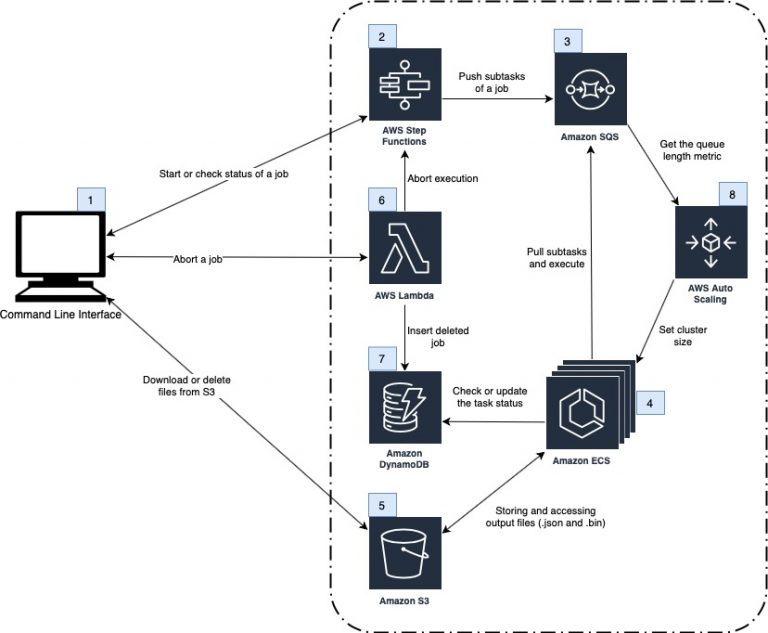

Users interact with the solution using a command-line interface (CLI) that supports specific inputs related to calculation jobs. These inputs allow users to do the following:

- execute a job,

- check the status of a job,

- list all jobs and their statuses,

- abort a job that is running,

- delete all the files related to a job ID from the S3 bucket, and

- download all the files related to a job ID to the local computer running the CLI.

These inputs trigger the step functions that run the calculations, which in turn access an Amazon S3 bucket to store and retrieve the .bin and .json files used by the calculations.
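The CLI actions listed above can be sketched with Python's `argparse`. This is a minimal illustration only: the program name `chem-cli`, the subcommand names, and the `--job-id` and `--num-slices` options are hypothetical and are not taken from the project's actual CLI.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with one subcommand per CLI action.

    All names here are hypothetical placeholders, not the project's real CLI.
    """
    parser = argparse.ArgumentParser(prog="chem-cli")
    sub = parser.add_subparsers(dest="command", required=True)

    # Executing a job may also carry optional tuning values.
    execute = sub.add_parser("execute", help="start a calculation job")
    execute.add_argument("--job-id", required=True)
    execute.add_argument("--num-slices", type=int, default=None,
                         help="optional number of integral slices")

    # The remaining per-job actions only need the job ID.
    for name in ("status", "abort", "delete", "download"):
        cmd = sub.add_parser(name)
        cmd.add_argument("--job-id", required=True)

    # Listing covers every job, so it takes no job ID.
    sub.add_parser("list", help="list all jobs and their statuses")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(
        ["execute", "--job-id", "demo", "--num-slices", "4"]
    )
    print(args.command, args.job_id, args.num_slices)
```

Keeping one subcommand per action means each input maps to exactly one backend operation, which is one plausible way to organize such a tool.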

When the user enters an input in the CLI, the solution first instantiates an AWS Step Functions execution that orchestrates the tasks of the submitted job. The tasks are pushed to an Amazon SQS queue. The execution units, defined using Amazon Elastic Container Service (Amazon ECS), periodically poll the queue and execute any pending tasks.
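The queue-polling pattern described here can be illustrated without any AWS dependency. In the sketch below, Python's in-memory `queue.Queue` stands in for the Amazon SQS queue, and `drain_queue` and `handler` are hypothetical names; the real execution units run as ECS tasks and would keep polling rather than exit when the queue is empty.

```python
import queue
from typing import Callable, Dict, List


def drain_queue(tasks: queue.Queue, handler: Callable[[Dict], str],
                poll_timeout: float = 0.1) -> List[str]:
    """Poll the queue and run each task until no task arrives within poll_timeout."""
    results = []
    while True:
        try:
            task = tasks.get(timeout=poll_timeout)
        except queue.Empty:
            # This sketch stops here; a long-running worker would poll again.
            break
        results.append(handler(task))
        tasks.task_done()
    return results


if __name__ == "__main__":
    pending = queue.Queue()
    pending.put({"step": "overlap"})
    pending.put({"step": "initial_guess"})
    print(drain_queue(pending, lambda task: "ran " + task["step"]))
```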

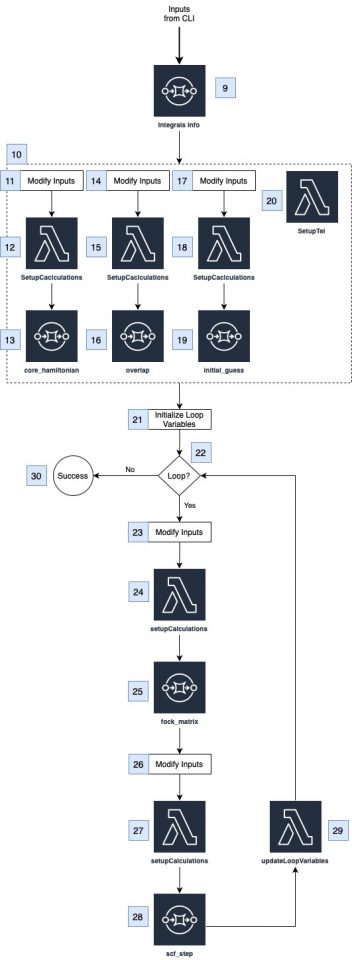

The first task to run in the step function is an info step, whose result is stored in the S3 bucket as a .json file. From there, four calculations run in parallel: the core_hamiltonian step, the overlap step, the initial_guess step, and the two_electrons_integrals step. The core_hamiltonian, overlap, and initial_guess steps follow a similar process: a name is added to the input, a setupCalculations Lambda function sets up the parameters for the step, and an ECS task executes the step. Each step generates a standard output, stored as a .json file, and a calculation result, stored as a .bin file, in the S3 bucket.
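The fan-out of the four parallel steps can be sketched locally with a thread pool. This is an illustration of the pattern only: in the solution the parallelism is handled by the step function and ECS tasks, and `run_parallel_steps`, `fake_step`, and the output file names below are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Step names as listed in the paragraph above.
PARALLEL_STEPS = [
    "core_hamiltonian",
    "overlap",
    "initial_guess",
    "two_electrons_integrals",
]


def run_parallel_steps(run_step: Callable[[str], Dict],
                       steps: List[str] = PARALLEL_STEPS) -> Dict[str, Dict]:
    """Run every step concurrently and return the results keyed by step name."""
    with ThreadPoolExecutor(max_workers=len(steps)) as pool:
        # pool.map preserves input order, so zip pairs names with results.
        results = list(pool.map(run_step, steps))
    return dict(zip(steps, results))


def fake_step(name: str) -> Dict:
    """Placeholder for a real step: reports where its outputs would be stored."""
    return {"stdout": name + ".json", "result": name + ".bin"}


if __name__ == "__main__":
    for step, outputs in run_parallel_steps(fake_step).items():
        print(step, outputs)
```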

The two_electron_integrals step follows a different process. It uses the info step result to get the calculation’s basis_set_instance_size value and prepares to split the calculation into parts using the numSlices value, which the user can specify in the CLI or leave to be chosen automatically. The program pushes all the integral parts into the queue and waits for all of them to complete.
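How the work is actually partitioned is not described here, so the following is only a sketch of one common approach: dividing an index range of a given size into `num_slices` contiguous, nearly equal parts. The function name `split_into_slices` and the even-split rule are assumptions, not the project's implementation.

```python
from typing import List, Tuple


def split_into_slices(total: int, num_slices: int) -> List[Tuple[int, int]]:
    """Return half-open (start, end) ranges that cover range(total) as evenly as possible."""
    if total < 0 or num_slices < 1:
        raise ValueError("total must be >= 0 and num_slices must be >= 1")
    # Never create more slices than there are items; keep at least one slice.
    num_slices = min(num_slices, total) or 1
    base, extra = divmod(total, num_slices)
    ranges = []
    start = 0
    for index in range(num_slices):
        # The first `extra` slices take one additional item each.
        size = base + (1 if index < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


if __name__ == "__main__":
    print(split_into_slices(10, 3))  # [(0, 4), (4, 7), (7, 10)]
```

Each resulting range could then be pushed to the queue as its own task, with the caller waiting until every part has reported completion.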

After the parallel executions, the program enters a loop to refine the final answer. A loopData dictionary is added to the inputs to track the number of iterations performed. The loop terminates once the number of iterations reaches a limit or the change in hartree_fock_energy between iterations (hartree_diff) falls below a threshold; both values can be specified in the CLI or left at their defaults. The fock_matrix step and the scf_step run using the same process as the core_hamiltonian step, the overlap step, and the initial_guess step, with standard outputs stored as .json files and calculation results stored as .bin files in the S3 bucket. The loopData dictionary is updated after each iteration, and once the maximum number of iterations or the minimum value of hartree_diff is reached, the loop terminates and the execution is marked as successful.
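The loop's termination logic can be sketched as follows. This is a simplified model under stated assumptions: the keys inside `loop_data`, the parameter names `max_iterations` and `threshold`, and their default values are hypothetical, and `scf_step` here is a plain callable standing in for the fock_matrix and scf_step tasks.

```python
from typing import Callable, Dict


def run_scf_loop(scf_step: Callable[[int], float],
                 max_iterations: int = 30,
                 threshold: float = 1e-6) -> Dict:
    """Iterate until the energy change drops below threshold or the limit is hit."""
    loop_data = {"iterations": 0, "hartree_fock_energy": None, "hartree_diff": None}
    while loop_data["iterations"] < max_iterations:
        energy = scf_step(loop_data["iterations"])
        previous = loop_data["hartree_fock_energy"]
        if previous is not None:
            # Change in energy relative to the previous iteration.
            loop_data["hartree_diff"] = abs(energy - previous)
        loop_data["hartree_fock_energy"] = energy
        loop_data["iterations"] += 1
        diff = loop_data["hartree_diff"]
        if diff is not None and diff < threshold:
            break  # converged
    return loop_data


if __name__ == "__main__":
    # Toy energy sequence that converges toward -1.0.
    print(run_scf_loop(lambda i: -1.0 - 0.5 ** i, threshold=1e-3))
```

The first iteration has no previous energy to compare against, so the sketch always performs at least two iterations before it can report convergence.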

To abort a job while it is still running, the CLI invokes an AWS Lambda function that aborts the step function execution and marks the job as deleted in the job status table in Amazon DynamoDB.
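A minimal sketch of what such an abort handler might do is shown below. The clients are passed in as arguments so the logic can be read and exercised without AWS access; with boto3, `sfn_client` would be a Step Functions client (which provides `stop_execution`) and `jobs_table` a DynamoDB `Table` resource (which provides `update_item`). The function name `abort_job`, the key name `job_id`, the attribute name `status`, and the value `DELETED` are assumptions, not the project's actual schema.

```python
from typing import Any


def abort_job(sfn_client: Any, jobs_table: Any,
              job_id: str, execution_arn: str) -> None:
    """Stop the running execution, then record the job as deleted."""
    # Stop the state machine execution that belongs to this job.
    sfn_client.stop_execution(
        executionArn=execution_arn,
        cause="Aborted by user from the CLI",
    )
    # "status" is a DynamoDB reserved word, so it is aliased as #s.
    jobs_table.update_item(
        Key={"job_id": job_id},  # hypothetical key name
        UpdateExpression="SET #s = :deleted",
        ExpressionAttributeNames={"#s": "status"},
        ExpressionAttributeValues={":deleted": "DELETED"},
    )
```

Stopping the execution before updating the table means the status record only changes once the stop request has been accepted.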

Link to solution on GitHub: https://github.com/UBC-CIC/electron-repulsion

Acknowledgements

Chen Greif

Ury Segal

Mark Thachuk

Alexandra Bunger

Photo by: thisisengineering on Unsplash

About the University of British Columbia Cloud Innovation Centre (UBC CIC)

The UBC CIC is a public-private collaboration between UBC and Amazon Web Services (AWS). A CIC identifies digital transformation challenges, the problems or opportunities that matter to the community, and provides subject matter expertise and CIC leadership.

Using Amazon’s innovation methodology, dedicated UBC and AWS CIC staff work with students, staff and faculty, as well as community, government or not-for-profit organizations to define challenges, to engage with subject matter experts, to identify a solution, and to build a Proof of Concept (PoC). Through co-op and work-integrated learning, students also have an opportunity to learn new skills which they will later be able to apply in the workforce.